Definition

Evaluation is

- the systematic collecting, analyzing and reporting of information

- about an audience’s knowledge, attitudes, skills, intentions and/or behaviors

- regarding specific content, issues or experiences

- for the purpose of making informed decisions about programming. (adapted from Patton, 1997)

Since the early 1990s, federal and state agencies, as well as foundations, have focused increasingly on performance-based budgeting. Government agencies are required by law to identify performance measures (also called indicators) and to document outcomes and impacts based on those indicators for the purpose of decision making, primarily about funding.

As a result, project providers, such as those supported by NOAA’s California B-WET, are asked to evaluate their outcomes and impacts. Some of you may be familiar with traditional indicators such as the number of people served or the amount of contact time. Those indicators are now viewed as outputs and are no longer sufficient measures of project success. The focus has turned to outcomes: how the people served by a project have changed as a result of their engagement with it.

All evaluations must be systematic and strategic. They are systematic in that they collect data from a representative sample of the audience or participants (or from the entire population) using a consistent method, which makes it possible to report results (see the definition above). They are strategic in that they measure what is most important to measure given the available resources.
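One simple way to get a representative sample is to draw participants at random from a complete roster. The sketch below is only an illustration of that idea: the roster, the participant IDs, the sample size of 30 and the function name `draw_sample` are all hypothetical, and a real project may need a different sampling approach (for example, sampling within each school or grade).

```python
import random


def draw_sample(roster, sample_size, seed=None):
    """Return a simple random sample of participants, without replacement."""
    roster = list(roster)
    if sample_size >= len(roster):
        # Small project: collect data from the entire population instead.
        return roster
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return rng.sample(roster, sample_size)


# Hypothetical roster of 120 participant IDs (made up for illustration).
roster = [f"participant-{i:03d}" for i in range(1, 121)]
sample = draw_sample(roster, 30, seed=42)
print(len(sample), "participants selected")
```

Recording the seed along with the roster lets someone else reproduce the same sample later, which supports the "consistent method" part of being systematic.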

Purpose

Your evaluation results (and hence your report) serve two purposes. An evaluation, by definition, provides information for making decisions about programming. Therefore the primary purpose of evaluation results is to provide decision makers (usually program staff) with enough information to help them make project design and delivery decisions without overwhelming them with details. From your evaluation you could:

- learn about your audience’s needs, knowledge, abilities, current practices, etc.

- improve the project so it matches staff abilities and resources while meeting audience needs, abilities, etc.

- determine if your audience is following through, that is, using project materials and/or skills, or

- determine the impact of your project: How is the audience different? How has the community changed?

The secondary purpose is to assist funders in assessing if the funds were well spent—your project’s merit and worth. With that purpose in mind, when you report results you need to include enough information so that someone unfamiliar with the project can read the report and understand the purpose of your evaluation, the issues/questions the evaluation addressed, the audience from whom you collected data, and what those data (the results) say about the impacts of your project.

Evaluation Stages & Types

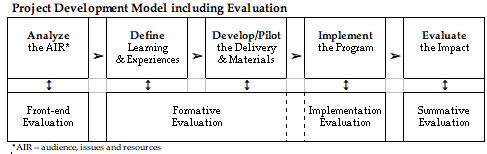

Evaluation goes by different names depending on its timing and on the decisions the results are meant to inform. It’s important to know which stage you’re in because that will help you formulate your evaluation questions. These stages coincide with the project development process, as shown in the accompanying figure.

Front-end Evaluation (including needs assessment)

Front-end evaluation is conducted at the beginning of a project and gathers data about an audience’s current knowledge, skills, behaviors and attitudes. This evaluation information is used to develop goals and objectives and to assess prior knowledge and misinformation. The term needs assessment is often used synonymously, but a needs assessment tends to be narrower in scope.

Formative Evaluation

Formative evaluation is conducted as you develop your project and gathers data about your audience’s reactions to and learning from draft materials or pilot activities. This evaluation information is used to make changes to improve the project.

Implementation (process) Evaluation

Implementation evaluation is conducted after a project has been implemented and gathers data about how the materials or activities are actually being used. Understanding this before a summative evaluation is important because projects that aren’t implemented as designed may not result in the intended outcomes. This evaluation information is used to correct the implementation or accommodate any issues in the delivery and intended outcomes.

Summative Evaluation

Summative evaluation is conducted at the end of a project to gather data about how well your project meets its objectives/outcomes. This evaluation information informs administrators and funders about your success or impact—how your audience is different as a result of your project.

Levels of Evaluation

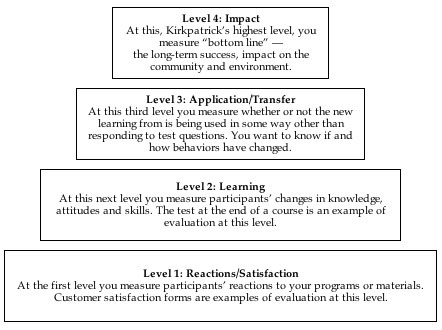

According to one model (Kirkpatrick, 1994), there are four distinct levels of evaluation. A project evaluation should begin with level one and then, as time and budget allow, move sequentially through levels two, three, and four. Information from each prior level serves as a base for the next level's evaluation. Kirkpatrick’s four levels of evaluation are reaction, learning, behavior, and results.

Your project could be successful at any or all levels, from how much teachers like the materials, to what students learn, to how engaged participants become in restoration activities, to how your community views its local environment. Each successive level represents a more precise measure of the effectiveness of your project. Ideally, all projects should attempt to measure all levels. However, because the higher levels require more rigor, more time and greater resources, they often go unmeasured.

Note: In addition to evaluation questions related to one or more of these levels, you may also want to include demographic or other background questions. These let you learn more about your audience and compare groups within it, for example by students' grade level, teachers' technology skill level, or participants' gender, ethnicity or age.
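As an illustration of comparing groups, the sketch below averages the change between a pre-survey and a post-survey score for each grade level. Everything in it is an assumption made for the example: the records, the field names `grade`, `pre` and `post`, and the function `mean_change_by_group` are hypothetical, and your own evaluation may use different measures entirely.

```python
from collections import defaultdict
from statistics import mean


def mean_change_by_group(responses, group_key):
    """Average post-minus-pre score change for each group."""
    changes = defaultdict(list)
    for record in responses:
        changes[record[group_key]].append(record["post"] - record["pre"])
    return {group: mean(values) for group, values in changes.items()}


# Hypothetical survey records, invented for illustration only.
responses = [
    {"grade": 4, "pre": 5, "post": 8},
    {"grade": 4, "pre": 6, "post": 7},
    {"grade": 5, "pre": 4, "post": 9},
    {"grade": 5, "pre": 7, "post": 8},
]

result = mean_change_by_group(responses, "grade")
for grade, change in sorted(result.items()):
    print(f"Grade {grade}: average change {change}")
```

The same function works for any background question: pass a different `group_key` (for example, a hypothetical `"tech_skill"` field) to compare other groups.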
